The modern world is increasingly digital, encompassing electronic devices, the internet, and online platforms. This world is constantly evolving, driven by technological advancements and shaped by how humans interact with digital technologies.

The key element of the digital world is information, which must be collected, stored, and processed in vast quantities. For many years this has been done by high-performance computing (HPC) systems, often fully custom and purpose-built. Breakthroughs in artificial intelligence (AI), machine learning, and large language models have brought a new way of handling information. It quickly became clear that there is a need for large-scale AI compute systems, which have much in common with HPC, and that both should be widely available to users and companies pursuing digital transformation.

Key industry players have started to unify and standardize how AI/HPC systems are built, focusing specifically on common approaches to connectivity for scaling, namely:

- Die-to-die interfaces and networks on chip

- Chip-to-chip, chip-to-module interfaces

- Connectivity within a rack (scale-up)

- Connectivity for multi-rack and cluster level (scale-out)

- Data center interconnect (DCI) and medium/long-haul connectivity

This work spans the physical, logical, and transport layers, common packet-delivery semantics, management APIs, and more. Along with it comes protection of data in transit, often referred to as network security.

This blog focuses on network security for Ethernet-based AI/HPC connectivity, namely scale-out networks and DCI. There is also a proposal to adopt Ethernet for scale-up connectivity, but that is outside the scope of this article.

Building blocks for AI/HPC

A basic element of an AI/HPC application is a compute node: a set of CPUs tightly coupled with a set of GPUs or other compute units. These are located either within one large multi-die package or as separate components on a board, typically connected via PCIe/CXL interconnects or proprietary chip-to-chip interfaces.

A compute rack combines multiple compute nodes by connecting them with relatively short-distance, high-speed, low-latency links (the so-called scale-up network). These links can be based on proprietary technologies, on standard PCIe/CXL (through cables, retimers, or switches), or on new initiatives such as UALink.

To make such a basic system complete, a compute rack must be efficiently connected to storage as well as to the user (or management plane). This access is provided by a few highly featured network interface cards (NICs), also called SmartNICs or data processing units (DPUs). A SmartNIC is typically a complex SoC with a few PCIe interfaces on the host side and one or two high-speed Ethernet ports on the network side. Essentially, it is a network connectivity server on a chip. It contains dedicated hardware as well as a cluster of CPUs to accelerate network protocols for storage, remote direct memory access (RDMA), security, virtualization, etc. Today’s SmartNICs provide 400G and 800G Ethernet ports, and next-generation products will support 1.6T Ethernet.

A large-scale AI/HPC cluster consists of hundreds or even thousands of compute racks interconnected through high-throughput, low-latency, predictable, and reliable connectivity. Such networks are called scale-out networks. For them, silicon vendors and system companies are extending SmartNIC features and connecting the SmartNICs to fabrics centered on purpose-built switch ASICs. These ASICs deliver unprecedented throughput, port density, and low latency combined with advanced telemetry and packet-forwarding intelligence. Today’s scale-out networks partially use proprietary technologies, while the industry trend is to move to all-Ethernet networks with an improved, standardized transport-layer protocol.

Network security for AI/HPC

AI/HPC clusters process tremendous volumes of valuable data and have already become a critical element of modern infrastructure. Therefore, they must be protected at every level that is exposed to potential threats. Network security is one of the key components, aiming to provide:

- Access control – allowing only the authorized nodes or users to access the compute systems

- Data confidentiality – encrypting data on the wire and protecting the encryption keys from being recovered

- Data isolation – using different secure domains and encryption keys for data belonging to different applications, jobs, or users

- Threat detection – the ability to detect manipulation of data and critical network headers, and to identify packets that are replayed, delayed, etc.
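MACsec and IPsec receivers commonly implement replay detection of the kind listed above with a sliding window over packet numbers (PNs). The following Python sketch illustrates the idea under simplifying assumptions. The class name `ReplayWindow`, the default window size of 64, and the rule that PN 0 is invalid are illustrative choices. None of them come from a specific standard or product.

```python
class ReplayWindow:
    """Sliding-window anti-replay check (illustrative sketch, not a spec implementation).

    Tracks the highest packet number (PN) accepted so far and a bitmap that
    records which of the preceding `size` PNs have already been seen.
    """

    def __init__(self, size: int = 64) -> None:
        self.size = size
        self._mask = (1 << size) - 1
        self.highest = 0   # highest PN accepted so far (0 means none yet)
        self.bitmap = 0    # bit i set => PN (highest - i) was already accepted

    def check_and_update(self, pn: int) -> bool:
        """Return True and record `pn` if it is fresh; False if replayed or too old."""
        if pn <= 0:
            return False  # this sketch treats PN 0 (and negatives) as invalid
        if pn > self.highest:
            shift = pn - self.highest
            # Slide the window forward; PNs that fall off the old end are forgotten.
            if shift >= self.size:
                self.bitmap = 1
            else:
                self.bitmap = ((self.bitmap << shift) | 1) & self._mask
            self.highest = pn
            return True
        offset = self.highest - pn
        if offset >= self.size:
            return False  # too old: falls outside the window
        if self.bitmap & (1 << offset):
            return False  # duplicate: this PN was already accepted
        self.bitmap |= 1 << offset
        return True


window = ReplayWindow(size=64)
results = [window.check_and_update(pn) for pn in (1, 2, 2, 5, 3, 100, 36, 37)]
print(results)  # [True, True, False, True, True, True, False, True]
```

The window still accepts packets that arrive slightly out of order, provided they fall inside it. Duplicates and packets older than the window are dropped. A hardware engine would typically hold the same logic as a fixed-width bitmap per receive security association.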

Because of the scale and cost of each AI/HPC system, high utilization is needed to achieve a return on investment. This requires network security features to keep up with the overall AI/HPC system goals:

- High throughput, ideally full line rate

- Minimal impact on latency

- Efficient support for the system scale (number of ports, nodes, and secure domains)

- Reasonable cost in silicon area and power
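"Full line rate" in the list above translates into a hard per-packet time budget. The following sketch estimates that budget. It assumes worst-case minimum-size 64-byte Ethernet frames plus 20 bytes of per-frame wire overhead (8-byte preamble/SFD and 12-byte inter-frame gap). Real traffic mixes and security-tag overhead would change the numbers.

```python
# Worst-case packet rate a line-rate security engine must sustain (illustrative).
# Assumption: minimum 64-byte Ethernet frames plus 20 bytes of per-frame
# overhead on the wire (8-byte preamble/SFD + 12-byte inter-frame gap).
MIN_FRAME_BYTES = 64
WIRE_OVERHEAD_BYTES = 20


def max_packets_per_second(line_rate_bps: float) -> float:
    """Maximum frame rate for back-to-back minimum-size frames at a given line rate."""
    bits_per_frame = (MIN_FRAME_BYTES + WIRE_OVERHEAD_BYTES) * 8  # 672 bits
    return line_rate_bps / bits_per_frame


def ns_per_packet(line_rate_bps: float) -> float:
    """Time budget per minimum-size frame, in nanoseconds."""
    return 1e9 / max_packets_per_second(line_rate_bps)


for name, rate in (("400G", 400e9), ("800G", 800e9), ("1.6T", 1.6e12)):
    print(f"{name}: {max_packets_per_second(rate) / 1e6:,.0f} Mpps, "
          f"{ns_per_packet(rate):.2f} ns per packet")
# 400G: 595 Mpps, 1.68 ns per packet
# 800G: 1,190 Mpps, 0.84 ns per packet
# 1.6T: 2,381 Mpps, 0.42 ns per packet
```

At 1.6T, a security engine has under half a nanosecond per minimum-size packet. That is one reason line-rate MACsec and IPsec engines are built as deeply pipelined hardware rather than in software.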

The good news is that network security protocols such as MACsec and IPsec, along with their custom variants, have been used in high-speed networking for more than a decade and have proven to be economically viable. On the other hand, updating features or introducing new protocols to support new AI/HPC use cases is inevitable. The following sections discuss this.

MACsec and IPsec

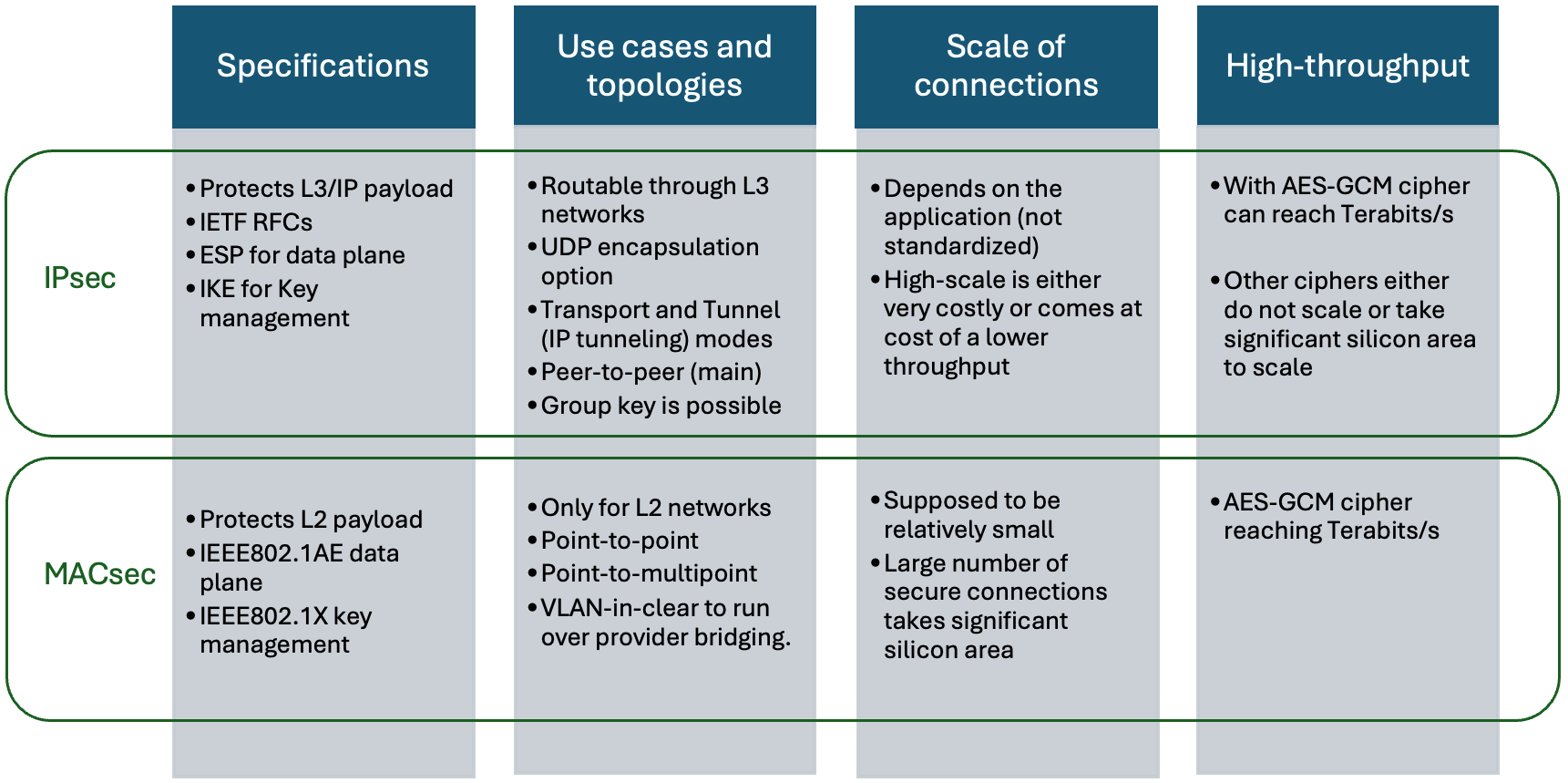

MACsec and IPsec are well-established network security protocols that are widely used to protect data traffic in applications ranging from automotive, industrial, 5G to high-speed data center and enterprise applications.

The figure below gives an overview of the key properties of MACsec and IPsec.

IPsec was defined to protect the L3 payload and is widely used to protect site-to-site and VM-to-VM connectivity. It is also a foundation of hybrid cloud security. IPsec is used in scale-out networks as well, but it requires a special approach because of inherent limitations of the IPsec architecture, specifically when many security tunnels are required.
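Simple arithmetic shows why many tunnels strain a conventional IPsec deployment. IPsec security associations (SAs) are unidirectional, so each bidirectional tunnel needs two. The calculation below assumes, purely for illustration, a full mesh of pairwise tunnels between N endpoints. Real deployments may use fewer tunnels or different topologies. The function name is hypothetical.

```python
def full_mesh_ipsec_state(endpoints: int) -> tuple[int, int, int]:
    """Return (tunnels, total SAs, SAs per endpoint) for a full mesh.

    Assumes one bidirectional IPsec tunnel per endpoint pair and two
    unidirectional SAs (inbound + outbound) per tunnel.
    """
    tunnels = endpoints * (endpoints - 1) // 2
    total_sas = 2 * tunnels
    sas_per_endpoint = 2 * (endpoints - 1)  # one inbound + one outbound SA per peer
    return tunnels, total_sas, sas_per_endpoint


for n in (16, 1_000, 10_000):
    t, s, per = full_mesh_ipsec_state(n)
    print(f"N={n:>6,}: {t:>12,} tunnels, {s:>12,} SAs, {per:>6,} SAs per endpoint")
```

With 10,000 endpoints, this model requires nearly 100 million SAs in total, and each endpoint holds about 20,000 of them. That per-node state is what a scale-out design has to avoid or compress.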

MACsec was defined to protect the L2 payload. It is therefore mainly used to protect direct port-to-port and hop-to-hop connections, as well as connections through service providers.
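The sketch below makes "protecting the L2 payload" more concrete. It shows the per-frame bytes MACsec adds and how the 96-bit AES-GCM nonce (IV) is formed. The values follow IEEE 802.1AE's default, non-XPN GCM-AES cipher suites as commonly described, and they should be checked against the standard. The SecTAG follows the MAC addresses, so the addresses stay in the clear but are authenticated, and the ICV is appended to the frame. The function names and the MAC address are hypothetical.

```python
import struct

SECTAG_BYTES = 8   # MACsec EtherType (2) + TCI/AN (1) + SL (1) + PN (4)
SCI_BYTES = 8      # optional explicit SCI carried in the SecTAG
ICV_BYTES = 16     # GCM-AES integrity check value


def macsec_overhead(include_sci: bool) -> int:
    """Bytes MACsec adds to each protected frame (SecTAG + ICV)."""
    return SECTAG_BYTES + (SCI_BYTES if include_sci else 0) + ICV_BYTES


def macsec_gcm_iv(src_mac: bytes, port_id: int, pn: int) -> bytes:
    """Build the 96-bit GCM IV used by the non-XPN cipher suites: SCI || PN.

    SCI = 48-bit source MAC address followed by a 16-bit port identifier.
    """
    if len(src_mac) != 6:
        raise ValueError("MAC address must be 6 bytes")
    if not 0 < pn < 2**32:
        raise ValueError("PN must be a non-zero 32-bit value")
    sci = src_mac + struct.pack("!H", port_id)
    return sci + struct.pack("!I", pn)


mac = bytes.fromhex("0200000000aa")  # hypothetical locally administered MAC address
print(macsec_overhead(include_sci=False), macsec_overhead(include_sci=True))  # 24 32
print(macsec_gcm_iv(mac, port_id=1, pn=7).hex())  # 0200000000aa000100000007
```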

Because both MACsec and IPsec use the AES-GCM cipher, they can support terabits per second of throughput. Nevertheless, these protocols lack the features and scalability required for scale-out networks. Network security work within the Ultra Ethernet Consortium is now addressing these limitations.
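Packet numbering is one example of a scale-related constraint at these rates. AES-GCM requires a unique nonce for every packet under a given key, and MACsec builds that nonce from a per-packet number (PN). A key must therefore be replaced before its PN space runs out. The sketch below estimates how long a 32-bit PN (original MACsec) and a 64-bit PN (MACsec Extended Packet Numbering, XPN) would last. It assumes back-to-back minimum-size frames (84 bytes on the wire). Links carrying larger frames would last proportionally longer.

```python
# Time until a packet-number (PN) space is exhausted at line rate (illustrative).
# Assumption: every frame is minimum size, 84 bytes on the wire
# (64-byte frame + 8-byte preamble/SFD + 12-byte inter-frame gap).
BITS_PER_MIN_FRAME = 84 * 8
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def seconds_to_exhaust(pn_bits: int, line_rate_bps: float) -> float:
    """Seconds before all 2**pn_bits packet numbers are consumed."""
    packets_per_second = line_rate_bps / BITS_PER_MIN_FRAME
    return 2**pn_bits / packets_per_second


for rate_name, rate in (("100G", 100e9), ("1.6T", 1.6e12)):
    t32 = seconds_to_exhaust(32, rate)
    t64 = seconds_to_exhaust(64, rate)
    print(f"{rate_name}: 32-bit PN lasts {t32:.1f} s; "
          f"64-bit PN lasts {t64 / SECONDS_PER_YEAR:,.0f} years")
```

Under these assumptions, a 32-bit PN space lasts only about 1.8 seconds at 1.6T (about 29 seconds at 100G). This is one reason high-speed MACsec relies on XPN together with automated rekeying.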

Ultra Ethernet Consortium

The Ultra Ethernet Consortium (UEC) was set up in 2023 by leading companies in HPC and connectivity with the goal of enhancing Ethernet’s capabilities by standardizing a high-performance Ethernet stack purpose-built for the unique demands of AI/HPC.

The UEC specification v1.0, publicly released in June 2025, defines a transport protocol that can deliver data directly from the network into application memory, and vice versa, without software involvement. This method is known as remote direct memory access (RDMA). The new transport protocol brings numerous enhancements over the widely used RoCE (RDMA over Converged Ethernet), and the two are expected to co-exist for some time.

For transport protocol protection, UEC defined a Transport Security Sub-layer (TSS), which leverages concepts from IPsec and PSP (Google’s open-source security protocol) to efficiently support multiple use cases at scale. TSS is a new protocol and is therefore not compatible with any previously defined protocol, although its implementation will have much in common with line-rate MACsec and IPsec solutions.
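PSP, one of the sources TSS draws on, avoids storing a key per connection on the receiver. The receiving NIC instead derives each connection's key from a device master key and the security parameter index (SPI) carried in the packet. The sketch below illustrates that idea only. It is not the TSS key schedule, and it is not PSP's exact one either. The PSP specification describes a NIST SP 800-108 counter-mode KDF built on AES-CMAC. The Python standard library has no AES-CMAC, so HMAC-SHA256 is substituted here as the PRF. The label `b"sa-key"` and the function names are hypothetical.

```python
import hashlib
import hmac
import struct


def kdf_counter_hmac_sha256(key: bytes, label: bytes, context: bytes, out_len: int) -> bytes:
    """NIST SP 800-108 counter-mode KDF, here with HMAC-SHA256 as the PRF.

    Block i = PRF(key, [i]_32 || label || 0x00 || context || [L]_32),
    where L is the requested output length in bits.
    """
    length_field = struct.pack("!I", out_len * 8)
    output = b""
    counter = 1
    while len(output) < out_len:
        message = struct.pack("!I", counter) + label + b"\x00" + context + length_field
        output += hmac.new(key, message, hashlib.sha256).digest()
        counter += 1
    return output[:out_len]


def derive_connection_key(master_key: bytes, spi: int, key_len: int = 16) -> bytes:
    """Derive a per-connection AES key from a device master key and a 32-bit SPI.

    The receiver can recompute this from the SPI carried in each packet,
    so it does not have to store one key per connection.
    """
    return kdf_counter_hmac_sha256(master_key, b"sa-key", struct.pack("!I", spi), key_len)


master = bytes(range(32))  # hypothetical master key; real keys come from a secure key store
k1 = derive_connection_key(master, spi=0x1000)
k2 = derive_connection_key(master, spi=0x1001)
print(len(k1), k1 != k2, derive_connection_key(master, spi=0x1000) == k1)  # 16 True True
```

The trade-off is a small amount of extra computation per packet, one KDF evaluation that hardware can cache or pipeline. In exchange, the NIC does not have to hold millions of per-connection keys.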

The figure below gives an overview of the key properties of UEC TSS.

Adding UEC security for AI/HPC

The TSS protocol is defined so that it protects the payload and can optionally authenticate network headers, while leaving routing and load-balancing information accessible.

Source: UEC specification

TSS also allows parts of the UEC transport headers to be left in the clear for ease of inspection and debugging. As a result, fabric switches do not need to process the TSS layer, and its presence in the packet is transparent to network operation. TSS will therefore be added only to SmartNICs, and the market will have two flavors of SmartNICs: UEC-only (requiring only TSS for security) and UEC+RoCE (requiring both TSS and IPsec).
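An AEAD-based security layer treats three regions of a packet differently: bytes left fully in the clear, bytes left in the clear but authenticated, and bytes that are both encrypted and authenticated. The sketch below shows that split in generic form. It does not reproduce the TSS field layout. The header lengths are hypothetical parameters, and the sketch assumes that any transport-header bytes left in the clear are always authenticated. The 42-byte example corresponds to Ethernet + IPv4 + UDP headers and is only an illustration.

```python
from typing import NamedTuple


class ProtectionRegions(NamedTuple):
    clear_unauthenticated: bytes  # visible to switches, not covered by the ICV
    authenticated_clear: bytes    # visible to switches, covered by the ICV as AAD
    encrypted: bytes              # confidentiality + integrity


def split_for_protection(packet: bytes, network_hdr_len: int,
                         clear_transport_len: int,
                         authenticate_network_headers: bool) -> ProtectionRegions:
    """Split a packet into the regions an AEAD security layer would treat differently.

    network_hdr_len:     bytes of headers switches need for routing and load balancing
    clear_transport_len: bytes of transport header deliberately left in the clear
    """
    if network_hdr_len + clear_transport_len > len(packet):
        raise ValueError("header lengths exceed packet length")
    net = packet[:network_hdr_len]
    transport_clear = packet[network_hdr_len:network_hdr_len + clear_transport_len]
    payload = packet[network_hdr_len + clear_transport_len:]
    if authenticate_network_headers:
        return ProtectionRegions(b"", net + transport_clear, payload)
    return ProtectionRegions(net, transport_clear, payload)


pkt = bytes(42) + bytes([0xAA] * 8) + b"secret payload"
r = split_for_protection(pkt, network_hdr_len=42, clear_transport_len=8,
                         authenticate_network_headers=True)
print(len(r.clear_unauthenticated), len(r.authenticated_clear), len(r.encrypted))  # 0 50 14
```

In every case, the routing and load-balancing headers stay readable. That is what lets fabric switches forward the packet without touching the security layer.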

Conclusions

MACsec and IPsec with the AES-GCM cipher have been used in high-speed networking for some time and have proven to be economically viable. Protecting AI/HPC, however, brings new use cases. Today these are partially addressed by adjusting how IPsec is used, and in the future they will be properly addressed by Ultra Ethernet-based solutions.

UEC TSS is an AI/HPC-focused security protocol developed to protect scale-out networks running over dedicated fabrics. TSS will be integrated into high-performance SmartNICs with 800G and 1.6T Ethernet ports.

IPsec continues to be used to protect RoCE traffic, virtual networks, and management traffic because of its broader compatibility and mature ecosystem.

MACsec continues to be used to protect DCI links running over medium/long-haul optical connectivity. The trend is to integrate MACsec into the coherent DSP ASIC inside optical transceiver modules supporting 800G and 1.6T Ethernet.

Additional links

- Rambus 1.6T MACsec Protocol Engine

- Network Security at Terabit-per-second Rates with MACsec, IPsec and UEC webinar

The post Network Security For AI/HPC: From MACsec/IPsec Towards Ultra Ethernet appeared first on Semiconductor Engineering.